Ollama LLM Benchmark Suite

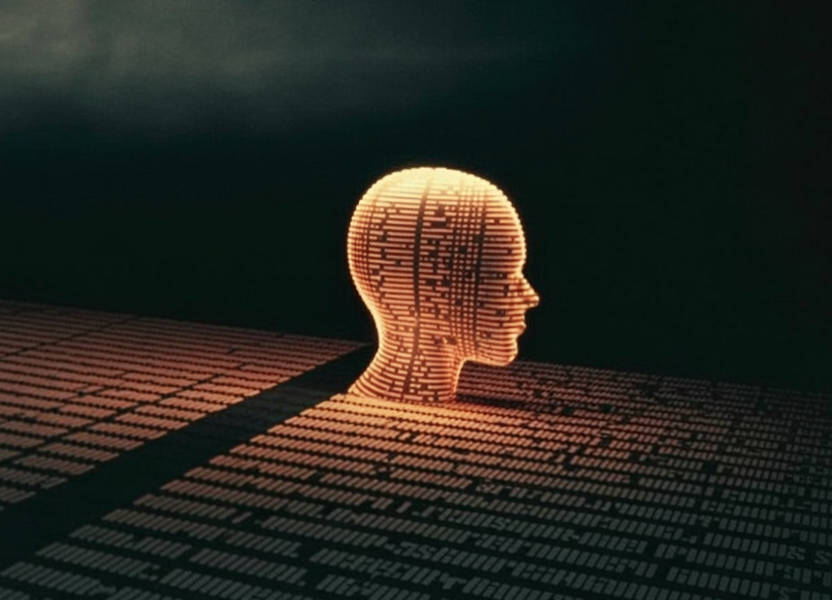

A lightweight Python-based benchmarking suite to evaluate and compare multiple Large Language Models (LLMs) locally using Ollama.
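The README doesn't show how the suite talks to Ollama, so here is a minimal sketch. It assumes a local Ollama server on its default port (`http://localhost:11434`) and uses the non-streaming `/api/generate` endpoint. That endpoint's reply includes timing fields in nanoseconds (`total_duration`, `load_duration`, `prompt_eval_count`, `prompt_eval_duration`, `eval_count`, `eval_duration`), and the sketch turns them into latency and tokens-per-second figures. The helper names `generate` and `timing_metrics` are hypothetical, not part of this project.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def generate(model: str, prompt: str, url: str = OLLAMA_URL) -> dict:
    """Send one non-streaming generation request and return the parsed JSON reply."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    req = urllib.request.Request(url, data=payload, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())


def timing_metrics(reply: dict) -> dict:
    """Convert Ollama's nanosecond timing fields into seconds and tokens/second.

    Prompt-evaluation fields may be absent (e.g. when the prompt is cached),
    so they default to zero instead of raising a KeyError.
    """
    ns = 1e9
    eval_s = reply["eval_duration"] / ns
    prompt_s = reply.get("prompt_eval_duration", 0) / ns
    return {
        "total_s": reply["total_duration"] / ns,    # end-to-end latency
        "load_s": reply.get("load_duration", 0) / ns,  # time spent loading the model
        "prompt_tokens_per_s": reply.get("prompt_eval_count", 0) / prompt_s if prompt_s else 0.0,
        "gen_tokens_per_s": reply["eval_count"] / eval_s if eval_s else 0.0,
    }
```

Usage, assuming the models have already been pulled (the names are only examples): `for m in ["llama3", "mistral"]: print(m, timing_metrics(generate(m, "Explain recursion briefly.")))`. Reading Ollama's server-side durations, rather than timing the HTTP call, keeps network and JSON overhead out of the throughput numbers.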

The tool measures latency, token-generation throughput, resource usage, and response quality for each model. It then ranks the models to help you choose the best LLM for your hardware.
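The README doesn't say how the ranking combines these metrics. One plausible approach is a weighted sum of min-max normalized scores, with "lower is better" metrics such as latency and memory inverted so that 1.0 is always best. In the sketch below, the metric names, the `Result` fields, the weights, and min-max normalization itself are all assumptions, not the suite's actual ScoreEngine.

```python
from dataclasses import dataclass


@dataclass
class Result:
    model: str
    tokens_per_s: float  # higher is better
    latency_s: float     # lower is better
    memory_gb: float     # lower is better
    quality: float       # higher is better; assumed to be a 0-1 rubric score


# Hypothetical weights (they sum to 1.0); the real suite may weight metrics differently.
WEIGHTS = {"tokens_per_s": 0.3, "latency_s": 0.2, "memory_gb": 0.2, "quality": 0.3}
LOWER_IS_BETTER = {"latency_s", "memory_gb"}


def normalize(values: list[float], invert: bool) -> list[float]:
    """Min-max scale values to [0, 1]; invert so that 1.0 is always the best value."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [1.0] * len(values)  # every model ties on this metric: equal credit
    scaled = [(v - lo) / (hi - lo) for v in values]
    return [1.0 - s for s in scaled] if invert else scaled


def rank(results: list[Result]) -> list[tuple[str, float]]:
    """Return (model, score) pairs sorted from best to worst."""
    scores = [0.0] * len(results)
    for metric, weight in WEIGHTS.items():
        column = [getattr(r, metric) for r in results]
        for i, n in enumerate(normalize(column, metric in LOWER_IS_BETTER)):
            scores[i] += weight * n
    return sorted(zip((r.model for r in results), scores), key=lambda p: p[1], reverse=True)
```

Because min-max scaling is relative to the models in the current run, scores are comparable only within one benchmark session. Adding or removing a model can shift every other model's score.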

Python

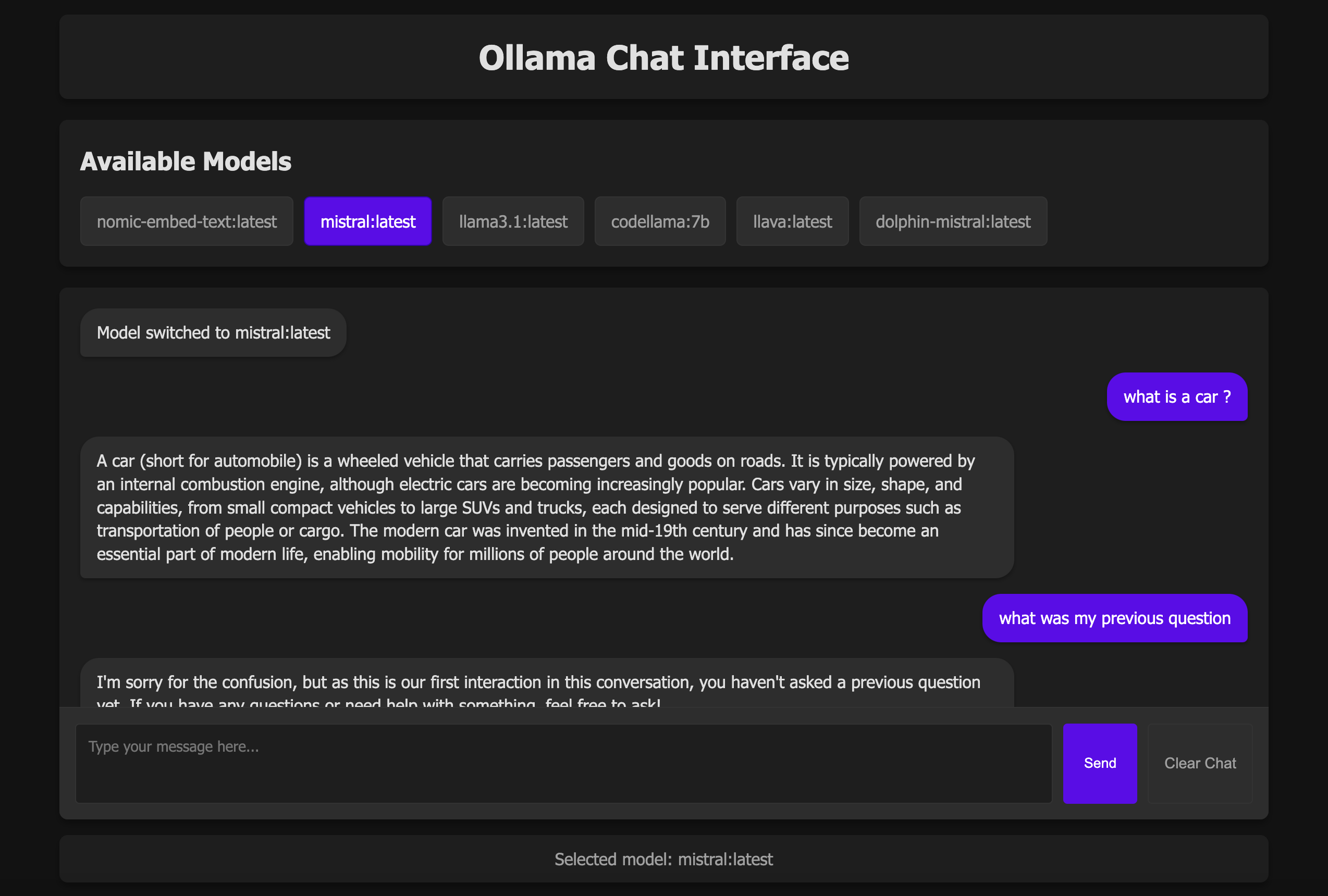

Ollama

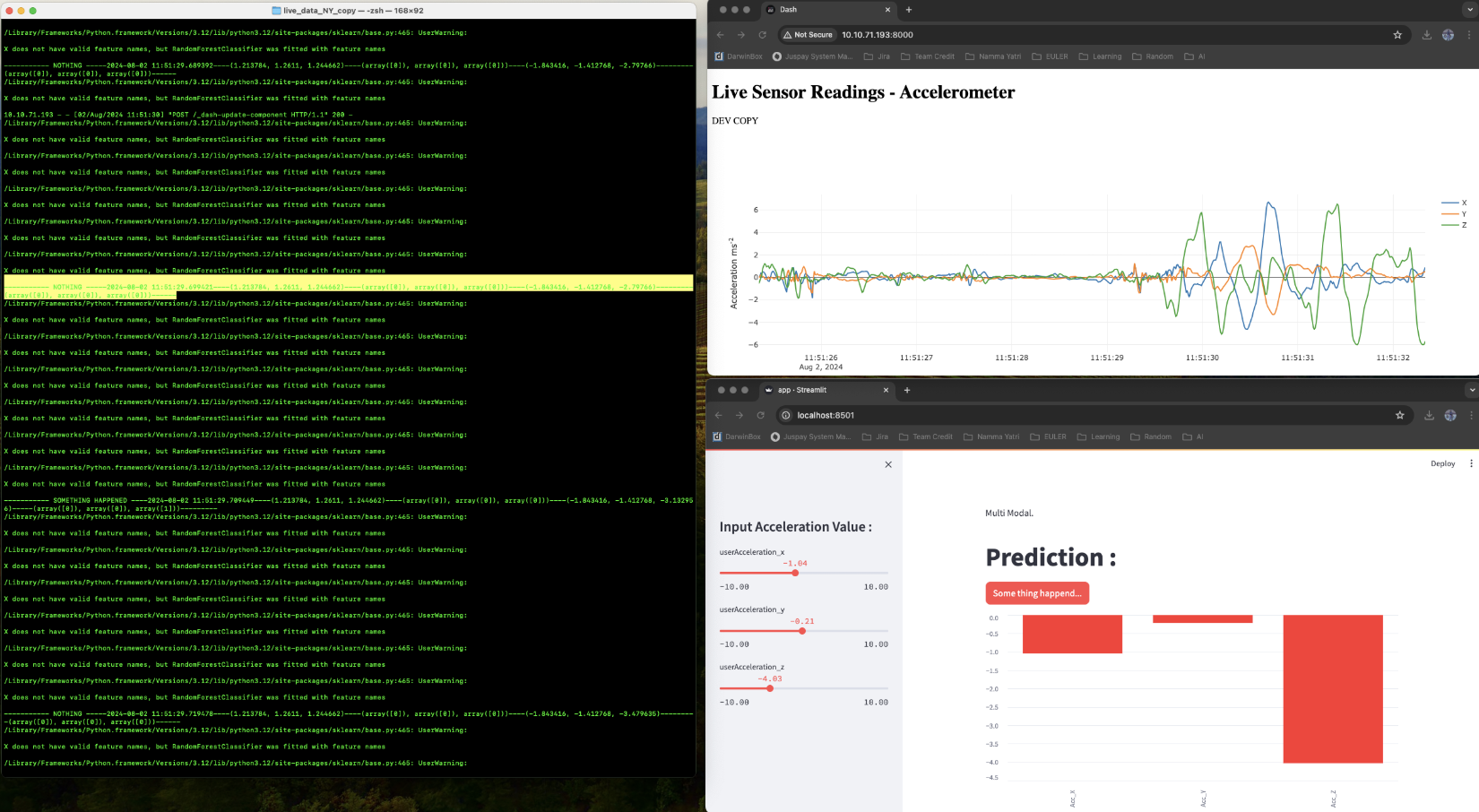

Benchmarking

Streamlit

LLM

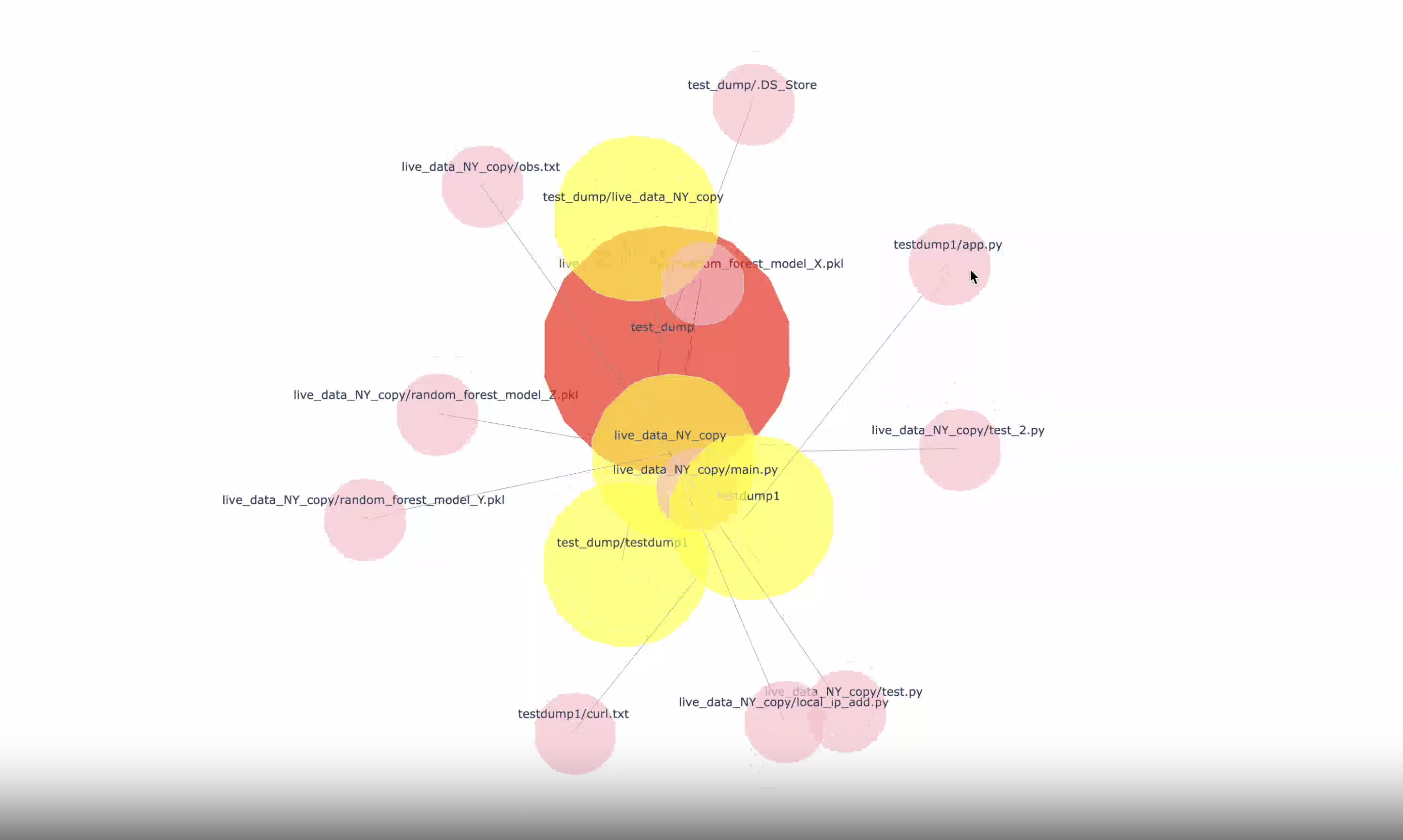

ScoreEngine

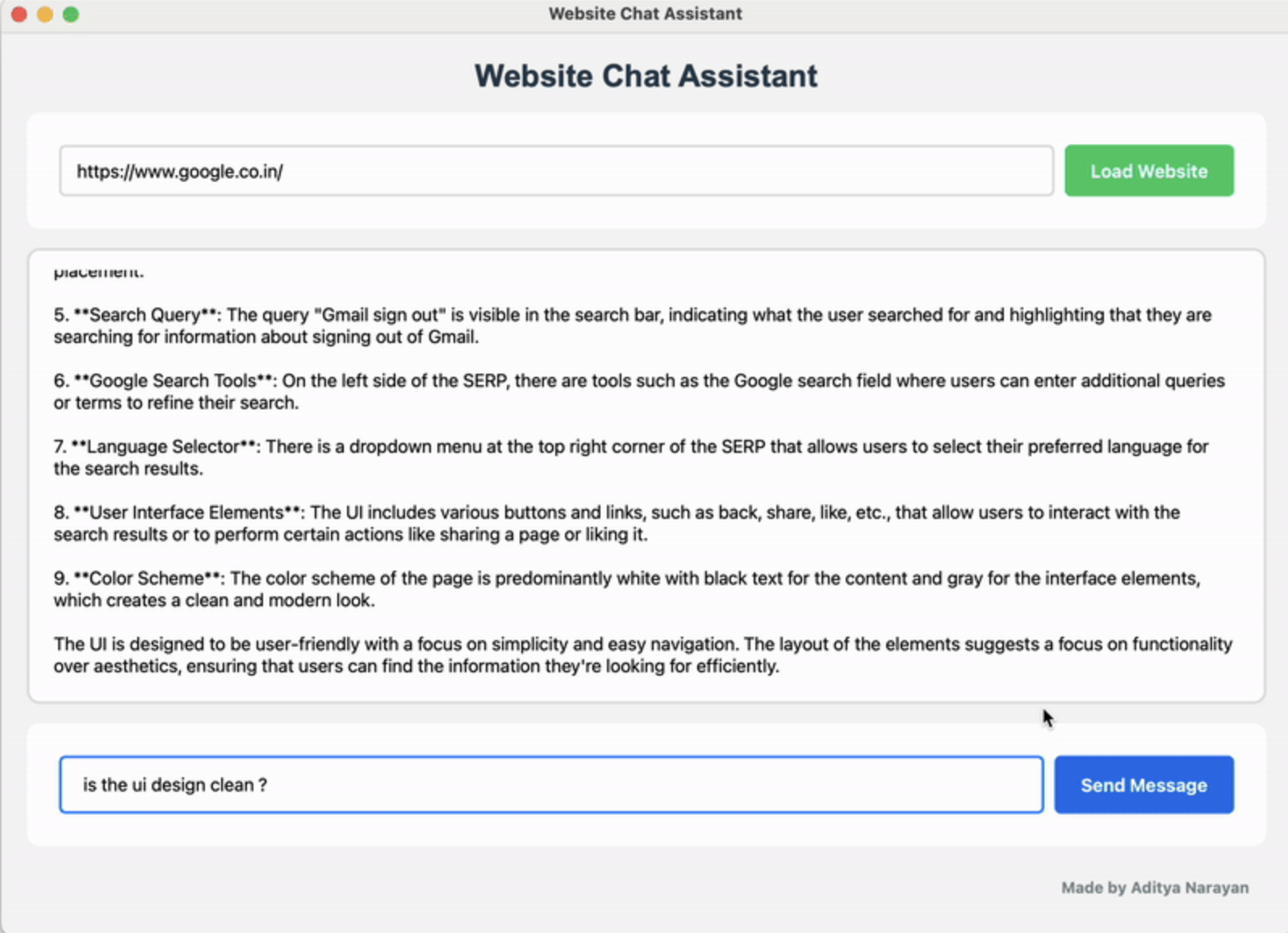

APIs

AI / ML